FEATURES OF LATEST PROTOTYPE: Using a Bluetooth audio receiver, we sent square-wave tones of varying length from the JavaScript program on our website's server side to the Arduino Mini to communicate step-by-step directions: left, slight left, right, slight right, and straight. Moving from hardwired data to sending the signal to the Arduino over a third-party Bluetooth hack was an important step in making this a user-testable mobile prototype, but it weakened the signal, so we had to add amplifiers to our circuit to increase the gain and make it usable again.
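
A minimal C++ sketch of how the receiving side might turn tone lengths back into directions. The durations, the tolerance, and the names `Direction`, `kCodes`, and `decodeTone` are hypothetical, since the actual timing values are not documented here; on the Arduino, the measured length would come from timing how long the amplified signal stays active (for example with `millis()`).

```cpp
// Directions sent from the web server as tones of different lengths.
enum class Direction { Left, SlightLeft, Right, SlightRight, Straight, Unknown };

struct ToneCode {
    Direction direction;
    unsigned long durationMs;  // nominal tone length in milliseconds
};

// HYPOTHETICAL tone lengths: the prototype's real values are not documented.
constexpr ToneCode kCodes[] = {
    {Direction::Left, 200},
    {Direction::SlightLeft, 400},
    {Direction::Right, 600},
    {Direction::SlightRight, 800},
    {Direction::Straight, 1000},
};

// Accept a measured tone if it lies within this many ms of a nominal length.
// Must be less than half the spacing between codes so windows never overlap.
constexpr unsigned long kToleranceMs = 80;

// Map a measured tone length to the direction whose nominal length is close enough.
Direction decodeTone(unsigned long measuredMs) {
    for (const ToneCode& code : kCodes) {
        unsigned long diff = measuredMs > code.durationMs
                                 ? measuredMs - code.durationMs
                                 : code.durationMs - measuredMs;
        if (diff <= kToleranceMs) {
            return code.direction;
        }
    }
    return Direction::Unknown;  // noise or a tone between two codes
}
```

Spacing the nominal lengths further apart than twice the tolerance keeps the windows from overlapping, so a tone slightly stretched or clipped by the Bluetooth link still maps to exactly one direction, while anything in between is rejected as `Unknown`.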

This prototype's form factor went through some rapid reshaping: we ended up with press-fit magnets for the enclosure's lid and laser-cut layers for a sturdier, customized, more design-conscious prototype. Plan for the final form factor: miniaturize it to fit completely into the handlebars.

FEEDBACK from our final class and from users at the Winter Show was consistent: people agreed this is a practical application, and there were many questions about how soon a rider would be alerted to an upcoming turn. People appreciated the secondary feedback from the LEDs. Miniaturizing and unifying the form factor was also a concern. Many people we spoke to asked about safety and whether the system could communicate more detailed Google Maps information, such as traffic lanes or the best bike route. As we currently see it, answering these kinds of questions seems too complex for the simple haptic feedback we are envisioning. This is where we feel the programming side of our next prototype will help: our True North/True Destination mode, in which a destination is treated as a compass bearing and the feedback is a general notification of its position relative to the rider's current direction of travel. This leaves the complex safety decisions to the rider, where they belong, while still giving basic information for orientation. We are not interested in lessening a rider's connection with their own decision-making and their environment; rather, our guiding principle has been to increase it.
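
Since True North mode is not implemented yet, the following is only one possible way to compute it, sketched in C++ under stated assumptions: the rider's position and heading are assumed to come from a GPS or compass (not part of the current circuit), the destination bearing uses the standard initial great-circle bearing formula, and the 20° and 60° thresholds and the names `initialBearingDeg`, `relativeBearingDeg`, `Cue`, and `cueFor` are hypothetical.

```cpp
#include <cmath>

constexpr double kPi = 3.14159265358979323846;

double toRadians(double deg) { return deg * kPi / 180.0; }
double toDegrees(double rad) { return rad * 180.0 / kPi; }

// Initial great-circle bearing from (lat1, lon1) to (lat2, lon2),
// in degrees clockwise from true north, normalized to [0, 360).
double initialBearingDeg(double lat1, double lon1, double lat2, double lon2) {
    const double phi1 = toRadians(lat1);
    const double phi2 = toRadians(lat2);
    const double dLambda = toRadians(lon2 - lon1);
    const double y = std::sin(dLambda) * std::cos(phi2);
    const double x = std::cos(phi1) * std::sin(phi2) -
                     std::sin(phi1) * std::cos(phi2) * std::cos(dLambda);
    return std::fmod(toDegrees(std::atan2(y, x)) + 360.0, 360.0);
}

// Destination bearing relative to the rider's heading, in (-180, 180].
// Negative values mean the destination lies to the rider's left.
double relativeBearingDeg(double bearingDeg, double headingDeg) {
    double rel = std::fmod(bearingDeg - headingDeg, 360.0);  // (-360, 360)
    if (rel <= -180.0) {
        rel += 360.0;
    } else if (rel > 180.0) {
        rel -= 360.0;
    }
    return rel;
}

// Coarse haptic cue, reusing the same five categories as turn-by-turn mode.
enum class Cue { Left, SlightLeft, Straight, SlightRight, Right };

// HYPOTHETICAL thresholds: within 20 degrees is "straight", within 60 is "slight".
Cue cueFor(double relDeg) {
    const double magnitude = std::fabs(relDeg);
    if (magnitude <= 20.0) {
        return Cue::Straight;
    }
    const bool toRight = relDeg > 0.0;
    if (magnitude <= 60.0) {
        return toRight ? Cue::SlightRight : Cue::SlightLeft;
    }
    return toRight ? Cue::Right : Cue::Left;
}
```

For example, a rider heading 350° toward a destination at a bearing of 10° gets a relative bearing of +20°, which falls in the `Straight` band; a destination 80° off to the right would trigger `Right`. Only the general direction is conveyed, which keeps the route and safety decisions with the rider.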

By the time of the show, once we had remounted our vibration motors and implemented our improved circuit, their haptic feedback was slightly too subtle. A higher DC voltage and current might have helped, but changing that so soon before our presentation at the Winter Show didn't seem wise, and we also did not have time to engineer a good insulation system within the handlebars.

NEXT PROTOTYPES: Ideas for the next prototype include mounting the motors strategically to prevent vibration splash, which would produce more distinct vibrations and let us create higher-resolution informational signals through haptic feedback. Multiple localized inlays that receive the vibration could perhaps communicate directionality along each handlebar in sweeping waves. We are also curious to explore different types of haptic technology. We have yet to implement the True North user mode, but exploring it is a top priority for the next step in the project.

LEARNING: I learned a lot, in positive ways: about good teamwork ethics and dynamics, about the importance of early user testing, and about how deep one must go in designing user interaction, particularly in understanding the user's mental model and accounting for various real-world constraints and scenarios through user testing and interviews. User test early and often. Don't worry about a prototype idea being imperfect; just build a version and then improve or change it. Nothing begets nothing.

BoM (bill of materials)

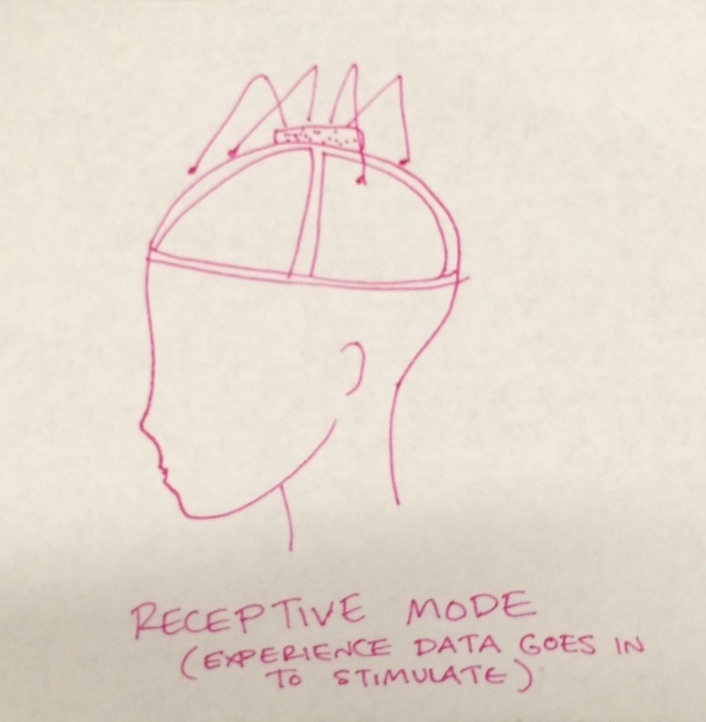

LATEST INTERACTION DIAGRAM

Our final circuit: Bluetooth audio receiver, lithium-ion battery, Arduino Mini, battery charger, and system power switch.

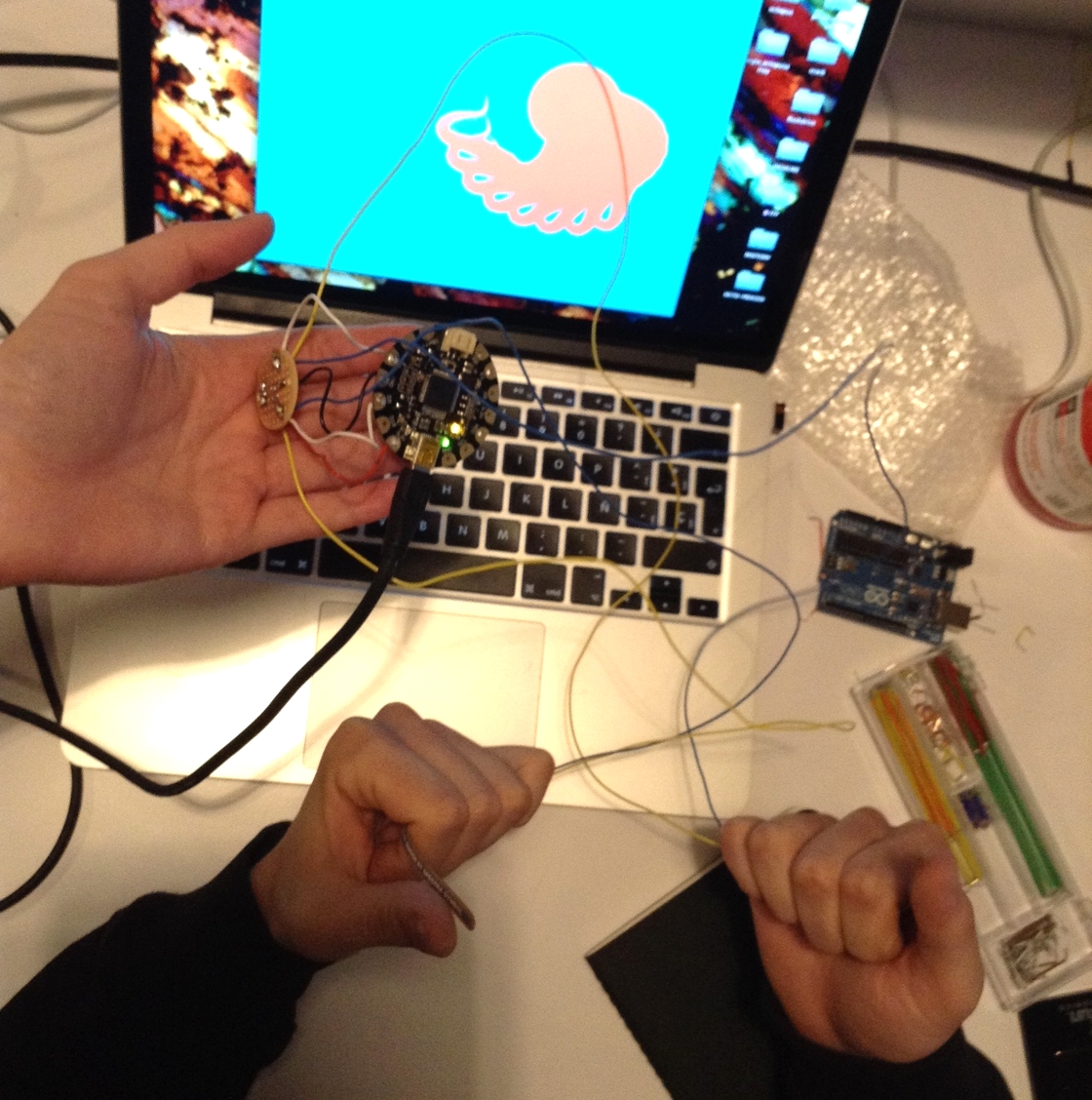

Documentation capturing our first user test! Sam reported that the pre-compiled step-by-step directions and vibration signals worked.

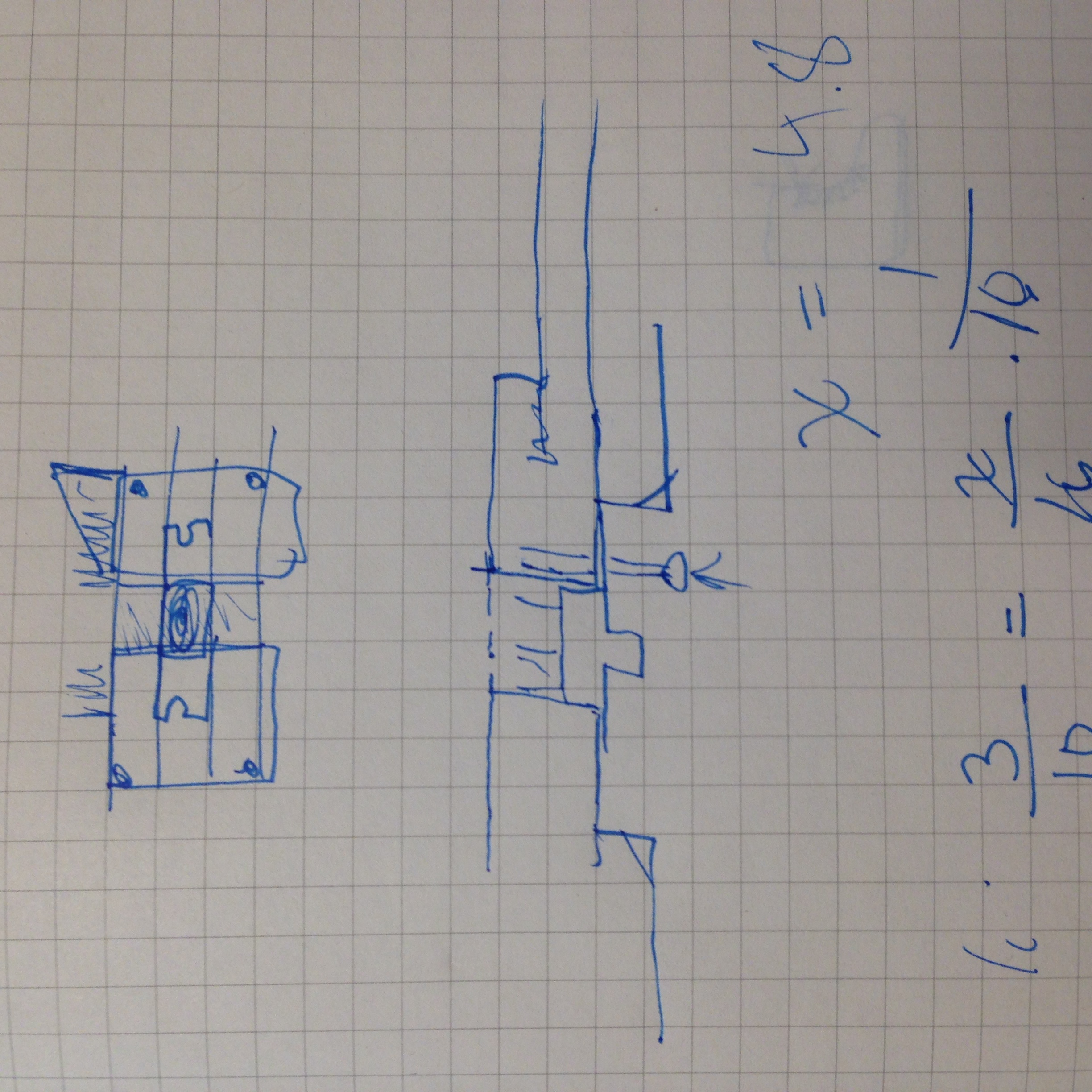

Evolution of the form factor and circuit.

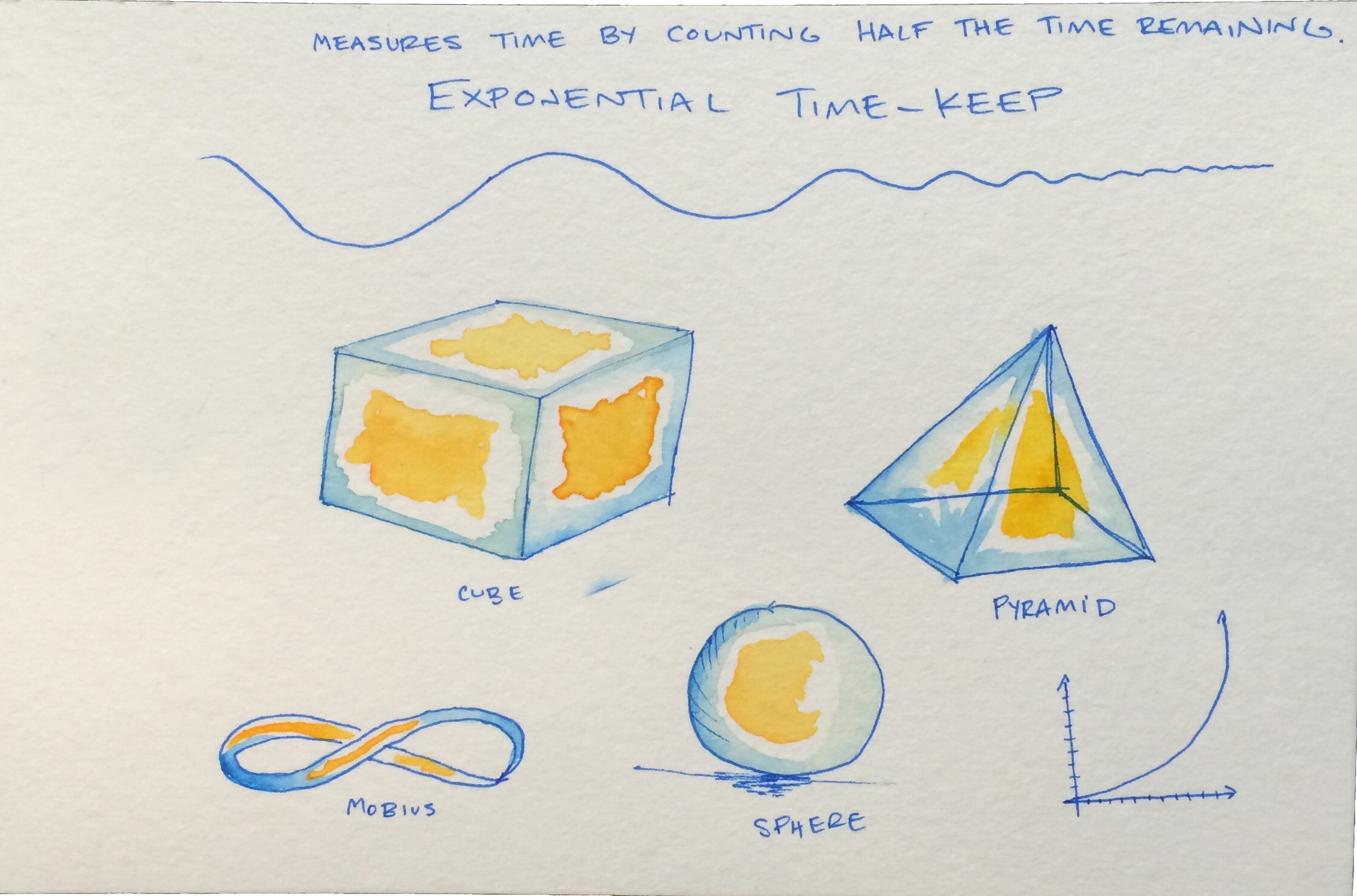

Some attempts at branding.