Read "Art and the API" and look at some of the art projects that use APIs at Google's DevArt. Write a blog post that discusses Thorp's piece and/or one or more DevArt projects and/or other projects that make use of APIs.

Coming from a still-developing film career, I'm looking for something more concrete from my new studies than a life spent begging people with money to take me and my creativity seriously, solely in the context of art. So I usually resist it, at least in my head, when I hear ITP called an art school. But despite my twice-bitten mistrust of the term "art," I always come back to this kind of thinking, quoted in Jer Thorp's piece, which I do believe in:

"The specific function of modern didactic art has been to show that art does not reside in material entities, but in relations between people and between people and the components of their environment." - Jack Burnham

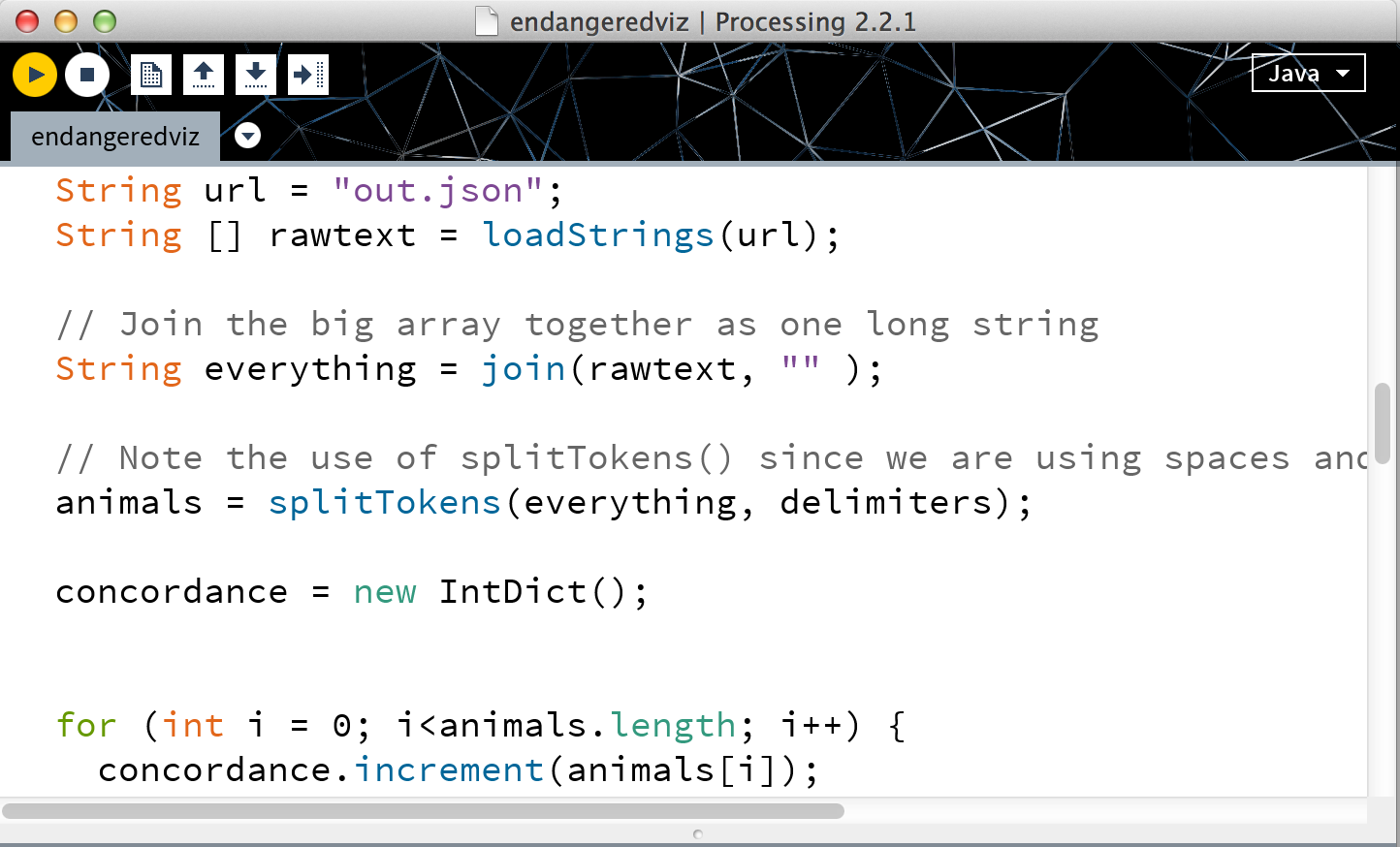

Ideas: making ideas clear through creative association, implied analogy, and whatever else is in the toolbox. It's analogy of form, concept, emotion, spatial relationship, et cetera that gives artists (and now especially "digital" or "tech" oriented artists) the free pass to step into any topic, analyze it with factual data, find those connections, and then show them to an audience. An Application Programming Interface is the common language that writes, encrypts, and unlocks that hall pass.

Starting at ITP was the first time I'd really heard about APIs, open or not. A commenter on Thorp's piece sums up the simple appeal of the concept, which struck me as a newcomer as well:

"I like the focus on the API over something static like a database. It empowers the artist to transform up-to-date and changing data into something equally up-to-date. Much more impacting, much more interesting".
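To make the commenter's point a little more concrete, here is a minimal Python sketch (my own illustration, not taken from Thorp's piece or any DevArt project) of the difference between reading a static snapshot once and polling a live source so the "artwork" redraws whenever the data changes. The endpoint URL, the `count` field, and the text-bar "rendering" are all hypothetical placeholders; a real project would swap in whatever API and visual output it actually uses.

```python
import json
import time
import urllib.request
from typing import Callable, Iterator

# Hypothetical placeholder endpoint, not a real API.
EXAMPLE_URL = "https://example.com/api/latest.json"


def fetch_json(url: str) -> dict:
    """Fetch and decode a JSON document over HTTP (standard library only)."""
    with urllib.request.urlopen(url, timeout=10) as response:
        return json.loads(response.read().decode("utf-8"))


def render(data: dict) -> str:
    """Turn one data snapshot into a visual form: here just a text bar.

    Assumes the (hypothetical) payload carries an integer 'count' field.
    """
    count = int(data.get("count", 0))
    return "#" * count + f" ({count})"


def live_artwork(
    fetch: Callable[[], dict],
    polls: int,
    interval_s: float = 0.0,
) -> Iterator[str]:
    """Ask the data source for fresh data on every poll and yield a new frame,
    so the output stays as up to date as the source behind it."""
    for i in range(polls):
        yield render(fetch())
        if interval_s and i < polls - 1:
            time.sleep(interval_s)


if __name__ == "__main__":
    # A static "database": read once, and the piece never changes.
    print(render({"count": 3}))  # prints "### (3)"

    # A live source, faked here so the sketch runs offline: each poll can differ.
    readings = iter([{"count": 2}, {"count": 5}])
    for frame in live_artwork(lambda: next(readings), polls=2):
        print(frame)  # prints "## (2)" then "##### (5)"

    # Against a real API this might look like (placeholder URL, so not run here):
    # live_artwork(lambda: fetch_json(EXAMPLE_URL), polls=10, interval_s=60)
```

The fetcher is passed in as a function so the same drawing code works whether the data comes from a frozen snapshot, a fake source, or a live endpoint; only the live version keeps the piece "equally up-to-date."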

P.S. Looking at the Dronestream Twitter feed makes me lamely ask: "What the fuck is our country doing obliterating people with drones?" It's stirring up an anthill to feed the machine that makes money burning the ants with a magnifying glass.

Again, the closing quote from Jack Burnham, "The significant artist strives to reduce the technical and psychical distance between [their] artistic output and the productive means of society," rings true with me.

P.P.S. All of the direct API links in Thorp's write-up seem to be broken.